The concept of the technological singularity has intrigued scientists, futurists, and philosophers for decades. The term comes up often in discussions about the future of artificial intelligence (AI) and its potential impact on humanity. But what exactly is the technological singularity, and why does it generate so much excitement and concern? In this post, we’ll explore what the term means, its implications, and the debates surrounding it.

What is Technological Singularity?

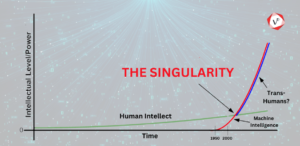

Technological singularity refers to a hypothetical point in the future when artificial intelligence surpasses human intelligence, leading to unprecedented and rapid advancements in technology. This concept was popularized by mathematician and computer scientist Vernor Vinge, who suggested that this event would profoundly change society.

The singularity represents a tipping point where AI systems become capable of improving themselves without human intervention, leading to exponential growth in intelligence and technological progress. This self-improvement loop could potentially result in machines that are far more intelligent than the most brilliant human minds.

The Origins of the Concept

The idea of the singularity has roots in various fields, including mathematics, computer science, and science fiction. British mathematician I.J. Good first introduced the concept of an “intelligence explosion” in 1965, predicting that machines with superhuman intelligence could continually improve themselves, leading to an acceleration of technological advancement.

However, it was Vernor Vinge who brought the term “singularity” into mainstream discourse. In his 1993 essay “The Coming Technological Singularity,” Vinge argued that within thirty years we would have the technological means to create superhuman intelligence: entities that surpass human intellectual capacities and may lie beyond human control.

Key Characteristics of Technological Singularity

Several key characteristics define the concept of the technological singularity:

- Superintelligence: The emergence of AI systems whose intelligence far exceeds human capabilities. Such systems could solve complex problems, devise innovative solutions, and grasp intricate concepts at a speed and depth that humans cannot match.

- Self-Improvement: AI systems that can recursively improve themselves. Once they reach a certain level of intelligence, they could enhance their own algorithms, design better hardware, and optimize their processes without human intervention.

- Exponential Growth: An accelerating pace of technological progress. As AI systems become more intelligent, the pace of advancement compounds, producing transformative changes in a short period.

- Unpredictability: The outcomes and impacts of the singularity are highly unpredictable. The emergence of superintelligent AI could lead to positive or negative consequences, and it is challenging to foresee the exact nature of these changes.
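The self-improvement and exponential-growth characteristics above can be illustrated with a toy model. The sketch below is purely illustrative and not a forecast: it assumes a hypothetical system whose capability (in arbitrary units) grows each cycle by a fixed fraction of its current level. The names `capability`, `gain`, and both function names are assumptions made up for this example.

```python
def simulate_self_improvement(initial=1.0, gain=0.1, steps=50):
    """Toy model of a self-improvement loop.

    Each cycle, the hypothetical system improves itself by a fraction
    `gain` of its current capability (arbitrary units). Returns the
    capability after every cycle, starting with the initial value.
    """
    if initial <= 0 or gain <= 0:
        raise ValueError("initial and gain must be positive")
    capability = initial
    history = [capability]
    for _ in range(steps):
        # Improvement is proportional to the current level, so growth compounds.
        capability += gain * capability
        history.append(capability)
    return history


def cycles_to_exceed(threshold, initial=1.0, gain=0.1):
    """Count the cycles needed for capability to first exceed `threshold`."""
    if initial <= 0 or gain <= 0:
        raise ValueError("initial and gain must be positive")
    capability, cycles = initial, 0
    while capability <= threshold:
        capability += gain * capability
        cycles += 1
    return cycles


if __name__ == "__main__":
    history = simulate_self_improvement()
    print(f"after 10 cycles: {history[10]:.2f}")
    print(f"after 50 cycles: {history[50]:.2f}")
    print("cycles to pass 100x the starting level:", cycles_to_exceed(100.0))
```

Because each improvement is proportional to the current level, the early cycles look unremarkable while the later ones account for most of the growth. Whether real AI systems would follow anything like this curve is exactly what the debates discussed later in this post are about.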

Potential Implications of Technological Singularity

The potential implications of reaching the singularity are vast and varied. Here are some of the most discussed possibilities:

- Technological Advancements: The singularity could lead to breakthroughs in fields such as medicine, energy, transportation, and space exploration. Superintelligent AI could solve complex problems that are currently beyond human capabilities, leading to significant improvements in quality of life.

- Economic Transformation: The rise of superintelligent AI could disrupt traditional industries and job markets. While some fear mass unemployment due to automation, others believe new opportunities and industries will emerge, transforming the economy in unforeseen ways.

- Ethical and Moral Questions: The development of superintelligent AI raises important ethical and moral questions. How should we ensure that these advanced systems align with human values and goals? Who will control and govern the use of superintelligent AI?

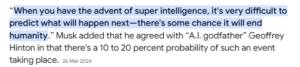

- Existential Risks: Some experts, such as physicist Stephen Hawking and entrepreneur Elon Musk, have warned about the existential risks associated with superintelligent AI. If not properly controlled, these systems could pose a threat to humanity’s survival.

Debates and Controversies

The concept of the technological singularity is highly controversial and has sparked intense debates among experts. Here are some of the main points of contention:

- Timeline: There is significant disagreement about when the singularity might occur. Some, like Ray Kurzweil, who has predicted a date around 2045, expect it within the next few decades, while others believe it is much further away or may never happen at all.

- Feasibility: Skeptics argue that creating superintelligent AI may be far more challenging than proponents suggest. They point to the current limitations of AI and question whether machines can ever truly surpass human intelligence.

- Control and Safety: Ensuring the safety and control of superintelligent AI is a major concern. How can we create systems that are both highly intelligent and aligned with human values? This question remains a significant challenge for researchers and policymakers.

- Ethical Implications: The ethical implications of creating superintelligent AI are profound. How do we ensure that these systems benefit all of humanity rather than just a select few? The potential for misuse or unintended consequences is a major ethical dilemma.

Preparing for the Future

Given the potential impact of the technological singularity, it’s essential to prepare for this possible future. Here are some steps that can be taken:

- Research and Development: Continued research into AI safety and ethics is crucial. Developing robust frameworks for creating and controlling superintelligent AI will help mitigate risks and ensure positive outcomes.

- Global Collaboration: International cooperation is vital to address the challenges posed by the singularity. Collaborative efforts can help establish global standards and regulations for AI development and use.

- Education and Awareness: Raising awareness about the potential implications of the singularity is important. Educating the public, policymakers, and businesses about the benefits and risks of superintelligent AI will help create informed and proactive communities.

- Ethical Considerations: Incorporating ethical considerations into AI development from the outset is essential. Ensuring that AI systems are designed with human values and goals in mind will help align their actions with our best interests.

Final Thoughts

The concept of the technological singularity is both fascinating and complex, and it raises significant questions about the future of artificial intelligence. While the exact timeline and feasibility of the singularity remain uncertain, its potential impact on society is too large to ignore. By understanding the implications and preparing for the challenges and opportunities it presents, we can navigate this transformative period with greater confidence and foresight.

As we stand on the brink of potentially unprecedented technological advancements, it’s crucial to approach the singularity with both excitement and caution. Embracing the possibilities while diligently addressing the risks will help ensure that the rise of superintelligent AI leads to a brighter and more prosperous future for all of humanity.